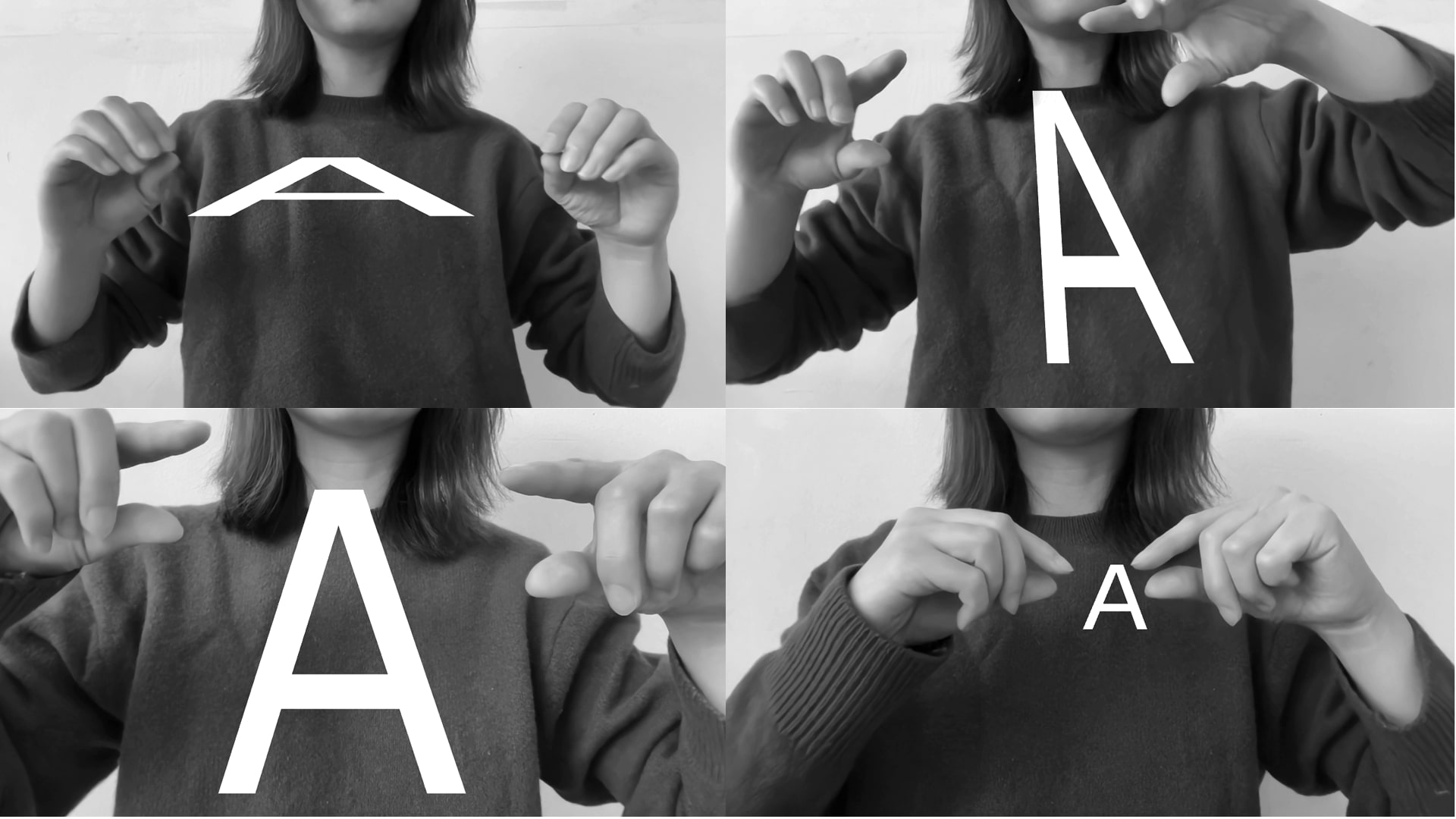

"Gesture GUI" is a gesture-based UI system that transforms human movement into a responsive interface.

Using gestures as input, users can scale, rotate, and re-position 3D objects, or even the camera, in real time across platforms such as Unity and TouchDesigner.

It invites a more embodied interaction with technology by reimagining the body as a controller, turning space into a canvas.
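
As a rough illustration of how two tracked hand positions could drive scale, rotation, and position, here is a minimal Python sketch. It is not taken from the Gesture GUI source: the `Transform` class, the `two_hand_transform` function, and the 2D coordinate format are all hypothetical, and the actual project may map gestures quite differently.

```python
import math
from dataclasses import dataclass


@dataclass
class Transform:
    scale: float                       # ratio of current to starting hand distance
    rotation_deg: float                # change in angle of the hand-to-hand line
    translation: tuple[float, float]   # movement of the midpoint between hands


def two_hand_transform(start, current):
    """Derive a transform from two hand positions (hypothetical input format).

    `start` and `current` are each ((left_x, left_y), (right_x, right_y)).
    """
    (l0, r0), (l1, r1) = start, current

    # Scale: how far apart the hands are now compared with the start.
    d0 = math.dist(l0, r0)
    d1 = math.dist(l1, r1)
    scale = d1 / d0 if d0 > 0 else 1.0

    # Rotation: change in the angle of the line from left hand to right hand,
    # wrapped into the range [-180, 180).
    a0 = math.atan2(r0[1] - l0[1], r0[0] - l0[0])
    a1 = math.atan2(r1[1] - l1[1], r1[0] - l1[0])
    rotation = (math.degrees(a1 - a0) + 180.0) % 360.0 - 180.0

    # Translation: movement of the midpoint between the two hands.
    m0 = ((l0[0] + r0[0]) / 2, (l0[1] + r0[1]) / 2)
    m1 = ((l1[0] + r1[0]) / 2, (l1[1] + r1[1]) / 2)
    translation = (m1[0] - m0[0], m1[1] - m0[1])

    return Transform(scale, rotation, translation)
```

In a setup like this, the resulting values would be sent each frame to the host application (for example Unity or TouchDesigner) and applied to the selected object or camera; how that hand-off is done is left open here.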

Available on GitHub